What Is the BERT Algorithm Update?

BERT is Google’s newest neural network-based technique for natural language processing (NLP) pre-training. BERT stands for Bidirectional Encoder Representations from Transformers. Let’s break down the acronym.

Bidirectional – In the past, Google, like many other language processors, read queries in a single direction, either left to right or right to left (usually depending on the language being searched). However, much of what we do in language requires us to analyze each word as it relates to everything else in the sentence. The “B” in BERT means the model considers the words on both sides of each word at the same time, which helps it better understand how the parts of a query relate to one another.

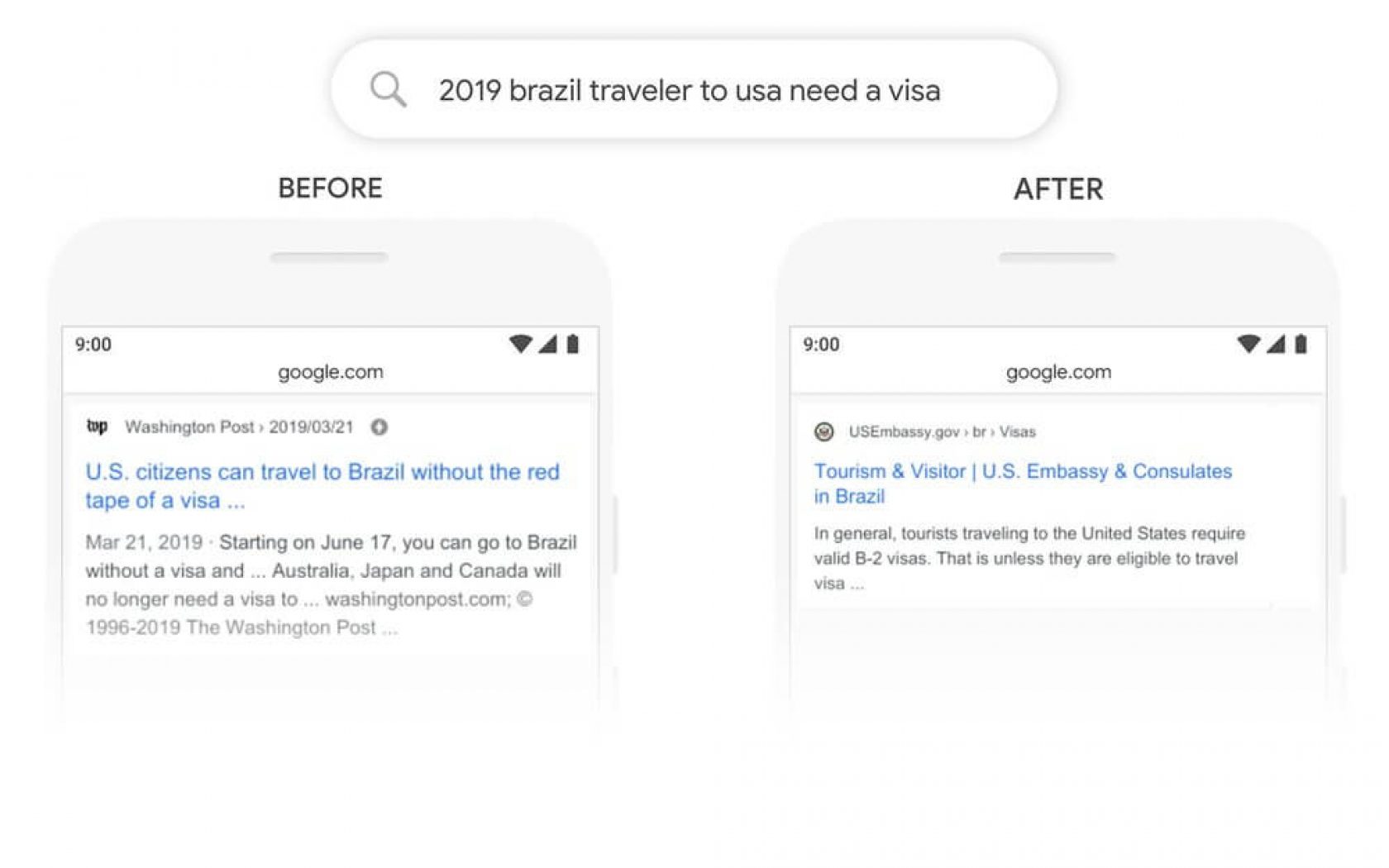
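To make the difference in direction concrete, here is a minimal sketch of which words a model may consult when it interprets one word in a query. The `visible_context` function and its mode names are hypothetical and exist only for this illustration; this is not how Google or BERT is implemented.

```python
def visible_context(tokens, index, mode):
    """Return the tokens a model may look at when representing tokens[index]."""
    if mode == "left_to_right":
        # Only the words that came before the target word.
        return tokens[:index]
    if mode == "right_to_left":
        # Only the words that come after the target word.
        return tokens[index + 1:]
    if mode == "bidirectional":
        # Every other word in the query, on both sides.
        return tokens[:index] + tokens[index + 1:]
    raise ValueError(f"unknown mode: {mode}")


query = "parking on a hill with no curb".split()
target = query.index("no")

for mode in ("left_to_right", "right_to_left", "bidirectional"):
    print(mode, visible_context(query, target, mode))
```

In the left-to-right case the word “no” is interpreted without ever seeing “curb,” the word it modifies. Only the bidirectional view has both “hill” and “curb” available at once.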

Transformers – A Transformer is a type of neural network that weighs each word in a sentence against every other word, rather than reading the words one at a time. That matters most for the small glue words in a sentence, such as “no,” which in the past were mostly ignored by Google’s search algorithm. Previously, when someone searched for “Parking on a hill with no curb,” Google would look at the primary words “Parking,” “hill,” and “curb” and overlook the critical word “no.” As you can see below, the old Google search would return results for parking on a hill with a curb. With BERT applied to the existing Google search algorithm, the search engine displays better results for the end user.

Image source: Google
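The “no curb” problem can be sketched with a toy keyword matcher. The stop-word list and the `keywords` function below are assumptions made up for this example and are not Google’s actual algorithm; the point is only that dropping small words makes two opposite queries look identical.

```python
# Hypothetical stop-word list, chosen only for this illustration.
STOP_WORDS = {"on", "a", "with", "no", "the"}


def keywords(query):
    """Keep only the 'primary' words of a query, dropping stop words."""
    return [word for word in query.lower().split() if word not in STOP_WORDS]


with_curb = keywords("Parking on a hill with a curb")
no_curb = keywords("Parking on a hill with no curb")

print(with_curb)
print(no_curb)
# Both queries reduce to the same keywords, so the negation is lost.
print(with_curb == no_curb)
```

A model that reads the whole query in context does not have to throw “no” away, which is why the two searches can return different results.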

BERT has learned not only how to recognize individual words, but also what those words mean depending on how they are put together, as well as the context of the words. BERT is a huge step forward in how machine learning deals with natural language.

Basically, BERT is trying to do more than just understand our words — it’s trying to understand the intent of those words, and that makes it very important to the future of search.

What We Know About The BERT Google Algorithm Update so Far

• Noble Studios noticed significant changes in specific SERP categories during October 2-6. We believe Google was testing BERT during this period, which we are referring to as BERT-lite.

• Most organizations that have worked with Noble Studios on SEO for at least two years saw very minimal changes, but newer clients that are just getting started with SEO saw significant SERP position changes during October 2-6 and October 19-30.

• Top categories affected by the BERT algorithm change on desktop SERPs were News, Jobs, Education, Finance, Law, Government, Reference and Autos & Vehicles.

• Top categories affected by the BERT algorithm change on mobile SERPs were News, Sports, Jobs, Education, Finance, Business, Industrial, Internet & Telecom, Government, Law and Arts & Entertainment.

• Google states that BERT does not replace the existing language algorithms or RankBrain, but it can be used in conjunction with them as an additional analysis layer.

• BERT also has a major impact on featured snippets. Unlike core search, where the change applies to English queries, the featured snippet changes apply to many different languages. Noble Studios has already seen major changes in the SERPs for other languages like Spanish, Japanese, French and German. The most common English SERP changes have been in the United States, Canada, Great Britain and Australia.

• Google says BERT currently impacts about 10% of all queries (approximately 560 million queries a day). Google also stated that BERT is the most significant step forward for its search algorithm in the past five years.

Image source: Google

• Google is treating searches as more conversational. The addition of People Also Ask (PAA) to search results showed that Google knows it has not, in many cases, offered exactly what a user is looking for, so it provides a list of commonly asked questions related to what it understands the search to be about.

Can You Optimize Pages For The BERT Algorithm?

Yes and no.

If you offer a service or product, then yes, you can optimize almost any page by looking at what Google currently sees as necessary for the query and then making sure you offer the best answer or product details for the end user. Remember, Google wants its users to get the answer they want the first time.

If you were ranking for a keyword that your page did not really answer well, or for a product you don’t really offer, then no, you will not be able to optimize the page to get the ranking back. That’s a good thing, though. Users will only reach the pages they are genuinely looking for, and you should see bounce rates decrease for pages that may have been ranking for erroneous keywords. Also, sites that once ranked above you for something they did not offer or answer will most likely drop lower in the SERP over time, giving you additional traffic from those searches.

Top 10 Winners From The BERT Algorithm Change

• http://www.mercurynews.com

• https://www.excelhighschool.com

• https://grinebiter.com

• https://www.lavanguardia.com

• https://www.gnc.com

• https://www.frenchbulldogrescue.org

• http://paperdollspenpals.com

• https://www.minipocketrockets.com

• http://leoncountyso.com

• http://howicompare.com

Top 10 Losers from the BERT Algorithm Change

• https://gasbuddy.com

• http://www.frenchbulldogrescue.org

• http://emergencyvethosp.com

• http://www.remax.com

• https://www.firstcitizens.com

• https://www.ellicottdevelopment.com

• https://ccbank.us

• https://subtract.info

• https://firstcitizens.com

• https://rvshare.com

The Future of the Google Search Algorithm

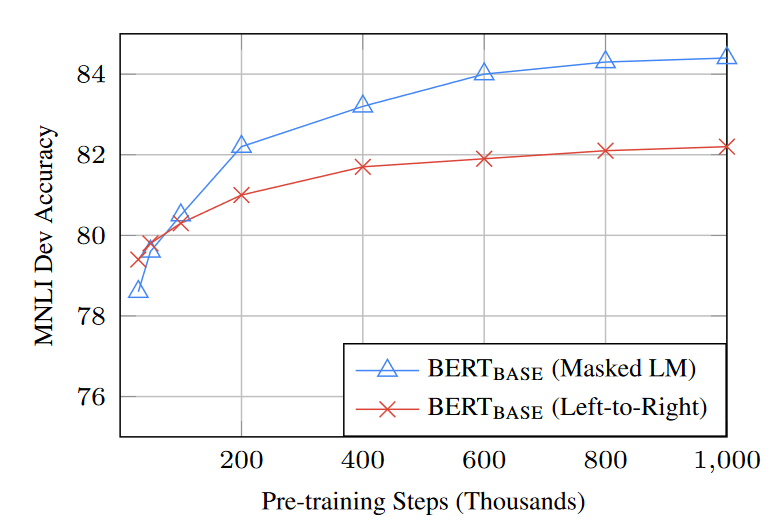

State-of-the-art advancements in NLP, such as applying bidirectional transformers, offer a huge step forward in providing the best results for search queries.

RoBERTa (A Robustly Optimized BERT Pretraining Approach) is what the Facebook AI team is working on. Preliminary data shows that the performance of BERT can be substantially improved by training the model longer, with bigger batches, over more data. By removing the next sentence prediction objective, training on longer sequences, and dynamically changing the masking pattern applied to the training data, Facebook hopes to extend the 2% gain Google has already seen with BERT.

Image source: Google
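The idea of dynamically changing the masking pattern can be sketched in a few lines. This is a simplified illustration, not the RoBERTa training code: the `mask_tokens` helper, the sample sentence and the fixed random seed are assumptions for the example, and real BERT-style masking also sometimes substitutes random words or leaves the chosen word unchanged.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, rng, mask_prob=0.15):
    """Hide each token behind [MASK] with probability mask_prob."""
    return [MASK if rng.random() < mask_prob else token for token in tokens]


tokens = "the driver parked on a steep hill with no curb in sight".split()
rng = random.Random(0)  # fixed seed so repeated runs behave the same way

# Static masking: the pattern is chosen once and reused for every epoch.
static = mask_tokens(tokens, rng)
static_epochs = [static for _ in range(3)]

# Dynamic masking: a fresh pattern is drawn each time the sentence is used.
dynamic_epochs = [mask_tokens(tokens, rng) for _ in range(3)]

for epoch, masked in enumerate(dynamic_epochs, start=1):
    print(epoch, " ".join(masked))
```

With dynamic masking, the model is asked to predict different hidden words on each pass over the same sentence, so it gets more varied practice from the same training data.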

The addition of BERT and RoBERTa is paving the way for even more advanced breakthroughs in the coming years. SEO professionals will now need to learn a whole new slew of terms and operations in order to better equip their sites for the future of search.

New Terms for SEO

• Synthetic Data

• GLUE (General Language Understanding Evaluation)

• SQuAD (Stanford Question Answering Dataset)

• SWAG (Situations With Adversarial Generations)

• Greedy Decoding

• Label Smoothing

• Loss Computation

• Data Loading

• Iterators

• BPE/ Word-piece

• Shared Embeddings

• Attention Visualization

Where to Find More About BERT

BERT’s model architecture is a multi-layer bidirectional Transformer encoder based on the original implementation described by Ashish Vaswani and colleagues in “Attention Is All You Need” (Advances in Neural Information Processing Systems, 2017) and released in the tensor2tensor library.
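For readers curious about what sits at the heart of a Transformer encoder, the following is a rough sketch of scaled dot-product self-attention in plain Python. It is heavily simplified: the `self_attention` function and the toy vectors are assumptions for illustration, and it leaves out the learned projections, multiple attention heads and stacked layers that the real architecture uses.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with no learned projections."""
    dim = len(vectors[0])
    outputs, weights = [], []
    for query in vectors:
        # Score this word against every word in the sentence, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        row = softmax(scores)
        weights.append(row)
        # The new representation is a weighted blend of all the word vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(row, vectors)) for j in range(dim)]
        )
    return outputs, weights


# Toy two-dimensional "word vectors", made up for this example.
toy_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(toy_vectors)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how much one word draws on every other word, in both directions, when its representation is built. That all-to-all comparison is what makes the encoder bidirectional.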

To better understand how language model pre-training improves many natural language processing tasks, read the paper “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding” from the Google AI Language team.

A qualitative analysis by Google’s AI team also reveals that the BERT model can, and often does, adjust the classical NLP pipeline dynamically, revising lower-level decisions based on disambiguating information from higher-level representations.